I got a couple of emails about last weeks issue, asking about both communicating expectations and team processes. The most important, the most fundamental of these two are expectations. In a team without a common understanding of expectation of each other, no process in the world will matter; they’re just so many words on paper.

With team members, peers, or colleagues, we share whether our expectations are met or not via feedback.

Feedback’s vital to calibrate and communicate shared expectations. Feedback is how we acknowledge that people have done a good or great job, meeting or exceeding expectations; and it’s how we give people nudges or start a conversation when they haven’t met expectations.

The fact is we all want feedback, from our managers, our community, our peers. We’re ambitious, driven people, and we want to know where the bar set is so we can clear it; we want recognition when we do clear it; and when we miss, we want to know so we can improve. Working in an environment where you’re not getting feedback means knowing there’s a bar there, somewhere, but not being able to see or feel it, and so having any idea whether your jumps are high enough. It’s disorienting and unsettling and unsatisfying.

(This is one of the reasons technical experts find it so hard to move to management. When we’re doing our technical work, we’re constantly getting feedback of some kind, if only from the compiler or the cluster nodes or the data analysis pipelines. As a manager, the feedback we get is much fuzzier, much less frequent, and much less reproducible).

We want teams where team members feel they can rely on each other; we want teams where the team members know that their manager will help them grow, and hold them accountable for areas they need to grow. We do that by communicating shared expectations in a feedback culture, and the way we start doing that is by demonstrating how to give feedback in a helpful and respectful way.

The first important thing about good feedback is that it’s about the future. There’s an article in this week’s roundup about incident response: there, as here, the past is gone and beyond our reach. We can’t do anything about it. All we can do is learn from it, and use what we learn to incrementally improve the future. We improve the future by nudging behaviours towards those that will have the impact desired.

The second important thing about good feedback is that it’s mostly positive. Negative feedback is the hardest to give and receive, so that tends to be the focus of discussions about giving feedback - fair enough. But most of the time, your peers and team members are meeting or expectations. That’s worth calling out! It’s arguably even more important to give feedback on meeting or exceeding expectations than for failing to meet them; there’s lots of ways to fall short of the bar, but only a few ways to meet or surpass it. Just giving positive feedback regularly will have a remarkable impact, and there’s basically no reasonable amount of positive feedback that’s too much (e.g. #64, “A Simple Compliment Can Make a Big Difference”). You can give positive feedback in precisely the same way as negative feedback, and for the same reasons - to make the future incrementally brighter by encouraging people to continue the good work they’re doing.

The third important thing about good feedback is that it is specific enough to be acted on. Each piece of good feedback is about a single, discrete, action or behaviour that had some impact: good or bad, that exceeded or failed to meet expectations. It’s focussed enough that people know what to do to meet expectations in the future. “Good job on the presentation” or “it doesn’t seem like you took this work seriously” are equally useless as feedback. What is the person hearing these things supposed to do? At least “good job” briefly feels good to hear, but it doesn’t tell you anything about what you should do in the future to continue doing a good job. And not taking this seriously - should you frown more when working on similar work next time? Spend more time on it? Which part of it? And why?

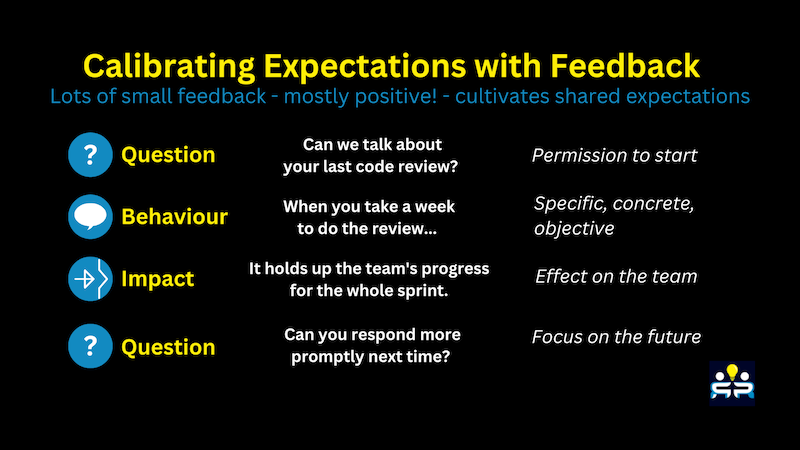

Collectively, we know good ways to give feedback that meet these requirements — managers have converged on a model that works really well (e.g. #120, Three Feedback Models). The Centre for Creative Leadership’s SBI model that Google uses, the Manager-Tools Feedback Model, and Lara Hogan’s feedback equation all follow a very similar structure:

There’s a question to open the conversation. That’s followed by a clear statement of a specific, concrete, objective behaviour and its impact, which are the core of all the models. And finally it ends with a question. Often that’s simply asking the recipient for a behaviour change (or to keep up the good work!). Other times, if you suspect there’s lack of a shared understanding of goals and priorities, or something else in the way, it can usefully be an invitation to a discussion (“what were you trying to optimize for there?”) to uncover what was going on.

There’s multiple ways to escalate feedback if it doesn’t “take.” But the vast majority of people you will ever work with want to meet expectations, whether you’re their manager or their peer, and will do so if given the chance.

That’s really all there is to it. Feedback lands better if there’s already good lines of professional communications open, such as one-on-ones. And negative feedback will feel more reasonable and fair to people who are receiving positive feedback about the things they are doing well. But developing a shared set of expectations via feedback is straightforward. Like any new practice, it can feel like a bit of a struggle to get started with, and feel unnatural at first. But it’s important, it’s worth doing, and your team members deserve to hear how they’re doing.

With that, on to the roundup!

Debugging Teams: Groundhog Day - Camille Fournier

Have you ever been on a team that seemed to work very hard but never move forward? Where you look back quarter after quarter, or perhaps year after year, and you did a lot, but nothing actually seemed to happen?

As people of science, we know the difference between speed and velocity. But from the inside of a team or organization that’s careening off madly without making consistent forward progress, it’s sometimes hard to tell them apart.

I write a lot, sometimes I think too much, about the importance of having a clear direction and prioritization, even though that makes for uncomfortable conversations sometimes. But that’s the only sustainable way for our teams to have the impact they and our research communities deserve. There are always more things that our teams could be doing than we have time to do. For our efforts to add up to anything meaningful, they have to be focussed in a consistent direction.

Three “Groundhog Day” symptoms Fournier highlights hit uncomfortably close to some of the situations I’ve seen over the years:

The solution is always just around the corner. Once we finish project X, things will be better. Once we sell to client Y, we’ll be set. Once we hire key hire Z, things will really take off. […]

Addicted to ‘snacking’. […] ‘low-effort, low-impact work’. Teams stuck in Groundhog Day mode often find themselves snacking, working on easy projects that seem like they should deliver quick wins but never actually make a difference.

A lack of metrics and accountability. […] You don’t learn anything project after project, so it’s almost as if each project doesn’t matter at all once it is completed. Without any metrics, you don’t know what is working and what isn’t working, and no one is held accountable for making sure that the work produced is actually meaningful.

The only solution to Groundhog Day, Fournier tells us, is to slow down, set a strategy that can be used to prioritize work (which means saying no to other work), understand why and how new tasks do or don’t fit into the strategy, and keep the goals in mind as the team starts to speed back up increase their velocity.

This HBR article about remote employees collaboration patterns is interesting. In a study of Zoom, Teams, and WebEx (!!) meetings from 10 large global companies, meetings between distributed team members who stayed with the company became more frequent, but also shorter, smaller (fewer attendees - by a factor of 2!), and more spontaneous.

As we’re all getting more used to remote work, we’re starting to figure out how to make it more effective, and it seems like we’re managing to reproduce some of the best parts of the in-office “hey, can we chat for a minute?” interactions.

The Incident Retrospective Ground Rules - Lex Neva, Honeycomb

Hiding Theory in Practice - Fred Hebert

Whether we’re managing projects or systems, things go wrong. And as leads and managers, how we handle things going wrong shapes our entire team. It affects how willing people are to raise issues, how willing they are to share with each other and ask questions, and how willing they are to try to improve things.

Neva describes how their first incident retrospective went at Honeycomb, starting with a meeting where the ground rules were read out (I’ve abbreviated them here):

Underlying these ground rules are some principles that have been long common elsewhere in incident response - a focus on learning versus action items, blame versus context, avoiding counterfactuals, and the importance of asking questions. We know why these are important. The past is fixed and immutable; assigning blame and otherwise making things miserable for team members accomplishes less than nothing. And hypothesizing about what might have happened in other parallel-universe pasts gets us nowhere.

Our goal is always to incrementally improve the future, and we can only do that by being genuinely open to learning about what happened. That way the entire team can learn from each other, and we can work together to make this way of things going wrong less likely in the future.

Hebert talks more explicitly about those principles, and some others (e.g. “human error” and “root cause” generally not being useful concepts). Hebert’s article is nice because he discusses what to do when talking to someone who nonetheless steers into violations of those ground rules - assigning blame, “they should have known”, etc.

Assuming the discussion is one-on-one, Hebert says trying to police the reaction or ignore it isn’t helpful:

Strong emotional reactions are as good data as any architecture diagram for your work. They can highlight important and significant dynamics about your organization. Ignoring them is ignoring potentially useful data, and may damage the trust people put in you.

This is good advice for managers and leads generally. He gives several suggestions about potentially useful places to dig in to learn more when presented with these reactions. (e.g., if this person is saying “it should have been obvious..” but it wasn’t obvious to the person they’re blaming, that “hints at a clash in their mental models, which is a great opportunity to compare and contrast them. Diverging perspectives like that are worth digging into because they can reveal a lot.”)

What can you really influence? Find out by taking a look at your Sphere of Influence - Kate Leto

A disturbingly easy way for an RCT leader to burn themselves out is by shouldering responsibility for things they can’t possibly control. (Ask me how I know!)

We’re ambitious people. We get into this line of work because we want to push forward the boundaries of human knowledge. There’s limitless places our teams’ expertise can be applied, and limited time to do so. So when we see barriers to what we know can be done, and recognize them as unreasonable, it’s pretty common to try to personally move the barriers out of the way.

The thing is, though, we can’t do everything, and in large established institutions — and especially in broad multi-institutional collaborations — a lot of things are just not within our power to control. Yes, we can slowly over time eke out change (#144, Hacking your Bureaucracy), but not all at once, and not without a lot of help.

Leto advocates for some frank acknowledgement of our various “spheres” - our spheres of control (what we actually directly change), our spheres of influence (where we can advocate, support, and help, but not directly change), and, well - everything else, the sphere of concern, where things lie that can affect us but we can’t affect. Writing down what’s in each, she argues, makes it much easier to focus attention where it can actually do some good, and to set aside what we can’t change or fix.

Take Aim: How To Reflect, Set Direction, and Make Progress In The Year Ahead - Ashley Janssen

So I’m doing advent of code again this year - a popular annual programming problem-fest.

Last year, my goal was “practice my long-out-of-date C++”. This year, my goal is “I’m really excited about where the C++ standard is going for parallel execution, but to make use of it I’d need to better understand C++20 ranges, and C++17/C++20 algorithms, so I’m going to tackle each problem using those as much as I can manage”.

Guess which one is proving more successful at helping me develop my skills?

Having a more specific goal, with a reason why I’m targeting that goal, is turning out much better at driving my skill growth. Already at day 10, my day 1 fumbling looks a little embarrassing. I suspect the day 9 and 10 solutions I’m currently so pleased with will look equally cringey by day 25. And yes, as a side effect I’m improving in other areas of C++, as well.

Janssen advocates taking a similar approach to personal goal setting for growth in the year ahead.

In our electronic and remote- or hybrid-work world, we generate a wealth of digital detritus - calendars, emails, documents - we can quickly review to remind us of the year that’s winding down. That provides us ample material for reflection.

She then advocates planting your feet in a direction, setting the direction you want to grow in, along with the reason why.

Only then does she suggest planning some processes to put into place to move you in the right direction - taking into account people who can help, and small actionable steps that can be taken to move you in the right direction.

A crucial thing she emphasizes - it’s true that the specific goal without some initial steps to get there is just wishful thinking, but it’s the goal that’s important, not the steps. As time goes on the steps can change and that’s fine. But a specific goal (including a why) and an initial plan are powerful together.

Research Application Managers: Overview - The Turing Way

I’ve mentioned Turing’s great living handbook before, which covers topics like reproducible research, project design, and more.

It also has a section on research infrastructure roles, which includes overviews of key roles like data stewards, community managers, and research software engineers.

Reader Michelle Barker, director of ReSA, emailed me to point out new kind of role listed on the Turing’ Way handbook, one that is starting to be actively discussed elsewhere: the Research Application Manager. (“Application” here is in the sense of application of new knowledge or result to a problem elsewhere, not as in a software application).

The problem that funding the role of RAM is meant to address is, quoting from the handbook:

Traditionally, academia is less interested in supporting and rewarding work on:

- Improving and extending existing research outputs/software

- Promoting interoperability of new and existing outputs/software

- Investing in usability, re-usability and user-friendliness of outputs/software (new and existing)

- Co-creating outputs with users from the early stages of the research output lifecycle

- Proactively discovering new real-world applications and use cases beyond the original academic field and investing in their promotion, adaptation and adoption

The problems RAMs are aiming to solve are ones that come up frequently in this newsletter! Back in #119 I talked about RCD research versus RCD development, and that translating research outputs into inputs in other area - moving things along the “technological readiness ladder” (#91) - is an important but underfunded responsibility.

In RCD, our mission is to scale our impact on research and beyond in our communities, while being bound to limited resources. These technology transfer (or research translation, or knowledge transfer, or knowledge mobilization — different fields use different terms) efforts can have substantial impact if they’re done well, by people who understand both the research and the communities that might find it useful.

The Turing Way handbook outlines some key measures for success in RAMs, including:

- Engaging with the research team early on in the project to bring the perspective of potential users of their software tools and to proactively co-create from the early stages

- […]

- Promoting the tools outside the academic field of the original research team

- Approaching the output as a research “product” and bringing an appropriate level of “market intelligence” to the academic team

- “Packaging” or “re-packaging” the tool to improve usability/accessibility to different audiences

As described this is a pretty creative role, requiring people who can deeply engage with researchers and with potential client communities, and can “think product” as well as direct projects. I’m pretty excited by the fact that this kind of role is being taken increasingly seriously, and I’ll be keeping an eye out for other organizations adopting it.

Have you seen other kinds of teams in your institution do this sort of work? What are such teams called, and how are they funded? How do they measure success? Drop me a line and let me know - hit reply, or email jonathan@researchcomputingteams.org.

Active knowledge in software development - Michael Feathers

Feather’s article argues that knowledge fades and goes stale. On the other hand, re-working with a piece of a code base re-activates the knowledge in the mind of the team member working on it.

The consequence of that is when we’re thinking about knowledge preservation and transfer on teams, we can usefully be thinking not just in terms of documentation but in knowledge activation - what knowledge is currently loaded into someone’s cache, and how to share that while the knowledge is active (through discussion, pair programming, or something else).

When we look at all of these qualities together, the most important thing to realize is that knowledge management should not be focused on conservation and storage. Instead, it should focus on generation and flow. Over time, knowledge dissipates, and people move from place to place. We need to concentrate on developing practices that help us rapidly acquire and generate knowledge on-demand to support the valuable work that we do in systems.

In retrospect this would have been a good article to pair with last issue’s (#148) post by Simon Willison about having a large number of projects. There, the approach was to keep work as self contained as possible, so that it was easy to pick up again. In Feathers’ language, that’s about making it easy to re-activate the knowledge.

From Zero to 50 Million Uploads per Day: Scaling Media at Canva - Robert Sharp, Jacky Chen

I always love a good migration story. Here, Sharp and Chen from Canva talk about the history of their storage solution for media content (management of stock photos and graphics that get used in graphic design work) a the company grew.

I admire how resolutely pragmatic the solutions they present have been - originally it was just a good old boring MySQL database. Eventually they added some additional tooling to make schema migrations easier, but kept the same foundation. They only started moving away from the simple approach when it was becoming truly untenable (schema migrations took six weeks!). They then implemented a simple sharding approach to stave off the worst problems, and started prototyping a number of different longer-term solutions.

Finally, once an approach was decided on, they did a staged migration, starting new writes to the new data store, then moving the old data across, before finally shutting down the old data store.

There’s a lot of advantages to what they had done. Foremost to my mind - by the time they moved to something non-boring, their data models were very mature and their usage patterns well understood, so they had a really good understanding of what they truly needed. (No one ever knows what implementations actually at the beginning of a product’s life).

In their own words, some of their biggest lessons learned:

- Be lazy. Understand your access patterns, and migrate commonly accessed data first if you can.

- Do it live. Gather as much information upfront as possible by migrating live, identifying bugs early, and learning to use and run the technology.

- Test in production. The data in production is always more interesting than in test environments, so introduce checks in production where you can.

All models are wrong, but some are useful, even though they’re very very wrong. Carnot’s absolutely foundational work on thermodynamics could not yet benefit from modern conceptions of energy. It was instead deeply informed by the bonkers “caloric” theory of heat, which posited that heat was an actual material substance called caloric which repelled itself and so flowed out of hot things. And yet that intuition produced the correct results, which in turn profoundly and positively affected the history of physics and engineering.

Using generative AI to help come up with ideas.

Really nice set of lessons on Natural Language Processing, going from nothing to quite sophisticated.

The IBM PC adopting the 8086 wasn’t a foregone conclusion. Other manufacturers, like TI and Motorola, had arguably better choices. How TI tried and failed to make it’s TMS990 the basis of the PC revolution.

Enjoy a relaxing holidays with the peace of mind that comes from having full test-suite coverage of your awk scripts (your awk scripts have test suites, right?) using goawk’s cover mode.

A Christmas tree ornament that plays Doom.

This is cool - another python ahead-of-time compiler, codon, which looks quite sophisticated, and supports parallel threaded or gpu execution.

Create commit messages using ChatGPT with commitgpt.

Quick tutorial on generating small linux distributions for embedded devices using buildroot.

How the Precision Time Protocol is being deployed at Meta.

How cross-platform container environments work.

And that’s it for another week. Let me know what you thought, or if you have anything you’d like to share about the newsletter or management. Just email me or reply to this newsletter if you get it in your inbox.

Have a great weekend, and good luck in the coming week with your research computing team,

Jonathan

Research computing - the intertwined streams of software development, systems, data management and analysis - is much more than technology. It’s teams, it’s communities, it’s product management - it’s people. It’s also one of the most important ways we can be supporting science, scholarship, and R&D today.

So research computing teams are too important to research to be managed poorly. But no one teaches us how to be effective managers and leaders in academia. We have an advantage, though - working in research collaborations have taught us the advanced management skills, but not the basics.

This newsletter focusses on providing new and experienced research computing and data managers the tools they need to be good managers without the stress, and to help their teams achieve great results and grow their careers.

This week’s new-listing highlights are below in the email edition; the full listing of 196 jobs is, as ever, available on the job board.